- Novaneuron

- Posts

- LLMs with 'Private Training' - Part 2: The Tech

LLMs with 'Private Training' - Part 2: The Tech

How can you build a privately trained LLM to help businesses and individuals?

Part 1: The BusinessPart 1 discuss the business aspects of privately trained LLMs. Click to read » | Part 2: The techThis is Part 2, which discusses the technology aspects of building a privately trained LLM. |

Recap from Part 1…

What are LLMs with ‘private training’?

LLMs, or large language models, are versatile systems that can be applied to a wide range of tasks. They have the capability to be trained for specific use cases or with specific private data.

Finetuning an LLM

Large Language Models (LLMs) such as GPT-3 or GPT-4, initially trained on diverse internet text, possess a broad base of generalised knowledge. However, they might not excel in specific domains or tasks straight out of the box.

That's where fine-tuning comes in. This process involves further training these models on a specialised, smaller and task-specific dataset to adapt them to specific applications. Building a Privately Trained LLM

The core steps

Here's a six-step guide on creating your own privately trained LLM.

1. The Data

Identifying your data source is crucial. It could be a plethora of resources like internal documentation, emails, customer information, or business intelligence data. Each dataset serves different purposes, so your choice should align with the use case. It forms the basis of your LLM's learning and knowledge.

2. Pre-processing the data

Next, you need to process your data, which involves cleaning, structuring, and normalising it for machine-readability. Utilize Python libraries like Pandas and NumPy or preprocessing tools from frameworks like TensorFlow or PyTorch. This ensures your LLM can effectively learn from the data.

3. Training

Training is where your LLM starts absorbing knowledge. Using machine learning frameworks, you input the processed data into your model, which learns and adapts based on the given information. Ensure your training set is representative of the tasks you expect your LLM to perform.

4. Evaluation

Post-training, it's crucial to evaluate your LLM’s performance. Choose metrics relevant to your use case, such as accuracy for classification tasks, or BLEU score for translation tasks. Evaluation helps you understand your model's efficacy and points out the areas requiring improvement.

5. Deployment

Deployment is the stage where your LLM is integrated into your desired platform or application. You'll need a supportive infrastructure, which could be on-premises servers or cloud-based solutions like AWS, Google Cloud, or Azure. Your choice should align with your scalability requirements and data privacy policies.

6. Feedback and Iteration

The final step is to establish a robust feedback system. This might involve tracking user interactions, soliciting user feedback, or setting up automated monitoring systems. Use this feedback for continuous LLM improvement, iterating through the previous steps as necessary.

Choose your approach wisely!

The approach to be taken depends a lot on the use case, expertise and resources you have at your disposal.

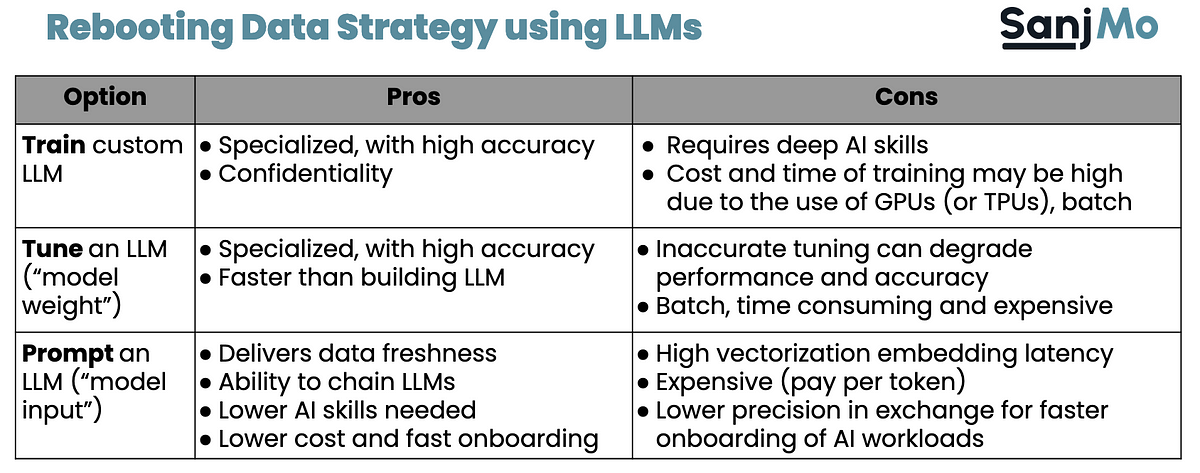

Let’s explore three possible pathways: Custom training, Fine-tuning, and smarter prompting of general-purpose LLMs.

1. Train a Custom LLM: A Tailored but Intensive Approach

Building a custom LLM might be the most challenging path, requiring significant resources and advanced AI expertise. This process involves designing and training an LLM from scratch, which is both data and tech-intensive. The substantial infrastructure needed for training a model as advanced as GPT-4, for example, might be beyond the means of many organisations.

However, the investment can be worth it. With this approach, you can create purpose-built models for specific tasks. For instance, you could train an LLM to classify Slack messages to identify personally identifiable information (PII). Such bespoke applications can offer distinct advantages over general-purpose models.

This approach is most suited for larger enterprises with advanced IT teams and the necessary resources to invest in AI infrastructure.

2. Fine-Tune a General Purpose LLM: Balancing Specificity and Accessibility

Fine-tuning a pre-existing, general-purpose LLM offers a balance between the customisation of a custom model and the accessibility of a ready-made one. In this process, you take a pre-trained model and 'fine-tune' it on your specific dataset, allowing the model to adapt to your particular use case.

Like custom training, fine-tuning requires a deep understanding of AI and an investment in infrastructure. However, it is typically less resource-intensive than creating a model from scratch. This method can be a smart choice for organisations with significant data and IT resources, but who are not quite ready to dive into full-scale custom LLM training.

3. Prompt a General Purpose LLM in a Smarter Way: The Beginner's Gateway

Prompting a general-purpose LLM in a smarter way is an accessible option for organisations making their initial foray into the world of generative AI. This approach involves feeding context into an LLM via APIs, guiding it to generate more useful outputs. The model inputs need to be converted into vectors, a process we'll discuss in a later section.

This strategy requires fewer resources and less AI expertise than the other two methods. While it may not offer the same level of customisation, it can be a powerful tool in the right hands. It’s an excellent starting point for organisations with modest IT skills and resources, looking to leverage AI's capabilities.

Courtesy of the base idea of this classification is from this amazing article from Sanjmo. For deeper technology explorations, please visit his blog here.

UPDATE: OpenAI opened fine-tuning capability to its models after this post was published. Here is our take on fine-tuning as an alternate approach to private training of LLMs.

Concluding…

Huge opportunities await for unlocking in building applications, or simply using these applications in your organisation.

Here’s to a brave new world!

For queries, comments, or simple brainstorming, write to us at [email protected]

This article just scratched the surface when it comes to the potential of the many applications you can build! Check out the below article for more ideas!